THE NEXT FIVE

THE NEXT FIVE - EPISODE 16

The Future of AI in Healthcare

Experts discuss how advancements in AI are set to transform the healthcare sector in the next 5 years

The Next Five is the FT’s partner-supported podcast, exploring the future of industries through expert insights and thought-provoking discussions with host Tom Parker. Each episode brings together leading voices to analyse the trends, innovations, challenges and opportunities shaping the next five years in business, geopolitics, technology, health and lifestyle.

Featured in this episode:

Tom Parker

Executive Producer & Presenter

Dr Alan Karthikesalingam

Senior Staff Clinician Scientist and Research Lead at Google

Greg Sorensen

Lead at Aidence

Inma Martinez

Chair of the multi-expert group at the Global Partnership on AI

The advent and speed of advancement in AI have far-reaching consequences for multiple industries. This five-part miniseries will spotlight various industry sectors where AI has a significant and growing impact, with this particular episode centering on AI's role in healthcare.

AI in healthcare offers life-changing benefits as well as raising far-reaching concerns. In the medical arena, various AI programmes like Large Language Models and Foundation Models are being used in many specialities, both in research and clinically. AI’s ability to rapidly process vast amounts of data and identify subtle patterns affords unrivalled potential within medicine. It can also help save money by streamlining processes. But there are risks. As the technology advances, so do concerns over inaccurate diagnoses that could exacerbate health inequalities that already exist in the system. Another area of particular focus is the transparency and trust of the AI models being built. This is where the importance of regulation comes in. We speak with Dr Alan Karthikesalingam, Senior Staff Clinician Scientist and Research Lead at Google, who offers his insight into the research and clinical applications of AI in healthcare. Greg Sorensen, Lead at Aidence, shows how AI is being used in the clinical screening of lung cancer, a key prevention tool that is already saving lives. Inma Martinez, chair of the multi-expert group at the Global Partnership on AI, addresses the importance of regulation and governance of AI in healthcare and beyond.

Our Sources for the show: FT Resources, CEPR, European Parliament research, CSET, BMJ, KCL.


Transcript

The Future of AI in Healthcare

Soundbites:

TOM: Advances in AI in healthcare could be a game changer

Alan Karthikesalingam (01:01.)

it has this amazing potential to improve the accuracy and availability and accessibility of expertise in healthcare.

TOM: And if used in diagnostics, it could save lives

Guest Greg (02:59.55)

Lung cancer is still the single biggest killer of all cancers. And the most effective thing we can do to fight lung cancer is screening.

TOM: But it needs regulation

Inma Martinez (00:42.46)

The primary asset to protect in healthcare is patient data. And that is the bloodstream of every AI system that develops anything for healthcare.

TOM

I'm Tom Parker, and welcome to The Next Five podcast, brought to you by the FT Partner Studio. In this series, we ask industry experts about how their world will change in the next five years, and the impact it will have on our day-to-day. We’re taking a break from the norm with this episode, instead kicking off a special five-part miniseries where we take a deep dive into the world of AI. Each episode will focus on an industry sector where AI is having, and is set to have, a big impact.

In this episode we’re tackling AI in healthcare, looking at the benefits, limitations, challenges and of course what the next five years will bring.

MUSIC FADE

TOM: AI has various definitions and acronyms associated with it. Generative AI is an umbrella term for systems that generate content such as images, audio, code and language. This is where another well-known term comes in. LLMs, or Large Language Models, are a type of programme that works with language; think ChatGPT or Google’s PaLM. Lastly, Foundation AI is a term that refers to systems that have broad applications. Large Language Models are often synonymously linked with foundation AI, as LLMs have far-reaching capabilities that can be adapted to specific purposes. In the medical arena, specific LLMs and Foundation models offer unrivalled potential in many specialities, both clinically and in research.

Alan Karthikesalingam (00:56.114)

So Tom, I think the benefits of AI in healthcare are really that it has this amazing potential to improve the accuracy and availability and accessibility of expertise in healthcare.

TOM: This is Dr Alan Karthikesalingam, Senior Staff Clinician Scientist and Research Lead at Google.

The ways we can imagine that happening are both by the assistance of those who are involved in care, imagine giving caregivers superpowers, but also potentially directly helping everyday consumers with everything from access to information to empowering them with what they need to make the best decisions.

Alan Karthikesalingam (08:58.35)

So one of the frontiers we're exploring at Google is in foundation models and frontier models generally in AI. And we've been exploring that particularly in healthcare with a research breakthrough called Med-PaLM. This was a large language model that we published and shared with the world in December of last year.

I was very lucky to have been the joint senior author of that work, and that was the first AI system that was ever demonstrated to be capable of reaching a pass mark on the kinds of questions you get in medical licensing exams. But what we were doing there really was exploring whether these large language models can be tuned to be reliable answerers of questions that you get in healthcare. And we showed, across a wide breadth of questions with this Med-PaLM system, that the AI was able to reliably answer the sorts of questions that consumers ask of Google, but also very technical questions that really test and probe its ability to recall medical facts and knowledge accurately.

So for a system like Med-PaLM, it's much newer technology. That kind of technology is not yet mature enough or ready enough for use in explicitly diagnostic applications.

Other examples which are maybe closer to the clinical practice of medicine are to do really with diagnostic expertise and trying to make tools that are useful and helpful to caregivers.

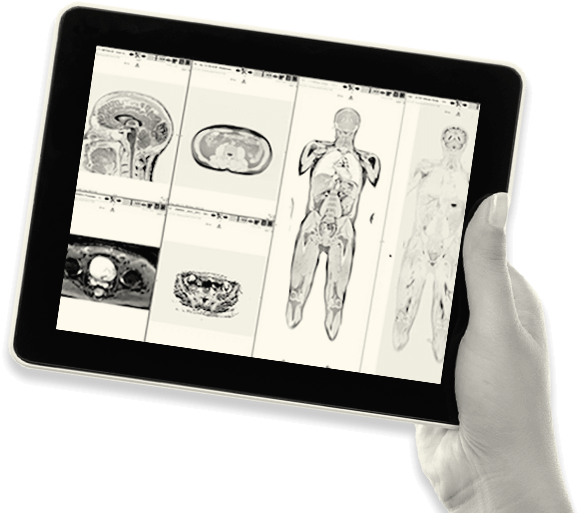

Just one example is in the role of medical imaging, which plays a really important role in the diagnosis and treatment of many diseases. Imagine tools that can help radiologists or ophthalmologists more quickly review an image or help identify which images are the most urgent and pressing for their attention. And there are now many examples, even with randomized trials coming through, of how AI tools are doing exactly that.

TOM: Helping radiologists and ophthalmologists review images quickly and accurately has clear benefits. But to get the best results, partnerships are key. Google has partnered with experts in clinical screening, sharing technological advances to get AI to clinicians and patients quicker.

Greg

So we do a lot of imaging, essentially: MRI, CTs, mammograms, X-rays, but only in the outpatient setting.

TOM: This is Greg Sorensen, who oversees Aidence, a Netherlands-based AI company involved in clinical lung cancer screening.

And Aidence already had its own AI and, in partnership with Google, is now trying to elevate that to the next level to help improve lung cancer screening.

Greg

Lung cancer is still the single biggest killer of all cancers. It is probably four times bigger than breast cancer, and the same thing, four times bigger in terms of the number of people killed every year, as prostate cancer. And the most effective thing we can do to fight lung cancer is screening.

Greg

When I started medical school, 40 years ago, if 100 people got lung cancer, within five years about 85 of them would die.

And with all of the recent therapies and all the immunotherapies and all the great chemotherapy, you can move that number from about 85 down to in the high 70s, unfortunately. So it's not that great, all the chemotherapies we have. And that's because lung cancer is typically found at a late stage. You don't get symptoms until it's spread. With screening, that number is down in the 60s, the low 60s. So lung cancer screening has a much bigger impact, positive impact, than all of the treatment things. And that's of course because most lung cancer is found when it's already spread. But with screening, we can find it when it's still a very early stage, and when it's early stage, it's less expensive to treat, it's easier on the patient to treat, and most importantly, it can be actually curative. If you find lung cancer when it's still small and hasn't spread, you can actually be cured of lung cancer. So that's the key to all cancers, is to find them early when they're still small and treatable.

TOM: Saving lives comes in many forms. Time and money are another invaluable saving that AI can offer. A May 2023 report published by McKinsey authors for CEPR (Centre for Economic Policy Research) shows the economic savings AI, at its current level, can afford the healthcare industry over the next five years: $200-360 billion annually, or 5-10% of spending.

Greg

Because the economics of doing lung cancer screening are so compelling, it's not only great for the patients, but if you're, say, the NHS, it actually saves you a ton of money to catch those cancers early, and that means you can treat more patients for the same cost. So from a population health point of view, screening makes a lot of sense. The challenge is that screening is a little bit tedious. Kind of by definition, these are people who don't have anything wrong with them, or in some cases you find a nodule incidentally. There's an incidental pulmonary nodule. And so what Aidence developed was a program they called Veye, for Veye Lung Nodules, a sort of a visual eye that could help the physician find these nodules.

So it will pick up the nodules and then it will quantify them, and in the lung cancer field there are guidelines that say, well, if a nodule is kind of this shape and of this size, then there are criteria and next steps that you follow that can be programmed into a software or that can kind of be guided to the physician's next step. And so what Veye Lung Nodules does is it helps identify those nodules, measures them, and then can plug them into a separate program we called Veye Clinic that helps the doctors manage patients, sends out a reminder saying, hey, it's been three months or six months, or here's what the guidelines say you should do next with this patient. The measurement and the management turns out to be a really critical thing. And I would say we've seen this in other domains. The pixel AI is very much a wonderful new thing. The AI can analyze images and identify abnormalities and measure things in a wonderful way that's new, that wasn't possible a decade ago.

TOM: It’s true, 10 years ago AI wasn’t quite up to scratch compared to humans. Machine models only correctly recognised images (normal, non-medical images) 70% of the time; humans, 95%. Only 5 years later, improved algorithms, better access to larger data sets and faster processors meant machines hit 96% accuracy. And yes, we humans are still at 95% now. Today, AI models are analysing medical images like MRIs, CT scans and X-rays with the same accuracy as clinicians, but only in certain use cases, and qualified medical professional expertise is still required to work in tandem. AI’s interaction with medical professionals is one of constant debate. Fears that AI will be relied upon too much by doctors, or deskill them, are apparent. Curtis Langlotz, MD, PhD, Director of the Center for Artificial Intelligence in Medicine and Imaging, said: “Artificial Intelligence will not replace radiologists…but radiologists who use AI will replace radiologists who don't.” Perhaps, like other industries where AI is being integrated, the importance is around reskilling of the human system.

Greg

So in a very controlled setting, the AI is better. But life is not controlled.

That's the challenge with today's AI. It's not yet robust enough or general purpose enough that it can take all that information and incorporate it the way we humans can. We know about the patient's history. We know what their family history might be. We know when they were last here. We know, for example, if they feel pain or if they've got a lump or something like that. The AI doesn't know any of that. It's just given the pixels. If you remove all of that information from the human as well and you just force the human to do that small task the AI can do, then the AI actually does outperform the humans. It is on average better than the humans. But as I said, that's not the real world. That's not how medicine is practiced. And so that's why AI is still a tool to be used alongside humans.

Greg

I'm old enough that when MRI came out, it was still called NMR. And the joke amongst us radiologists was the fear that NMR really stood for no more radiologists. That it was going to be so easy to read an MRI that the neurologist and neurosurgeon wouldn't need us and that we'd be obsolete. And in fact, the complete opposite happened. It made us more invaluable than ever.

Because we were essentially able to reskill, as you said, and learn about this new technology and take it to new levels. And I think the same thing will happen with AI. There's this sense that, you know, we're not going to be needed anymore as radiologists. I think it's actually just the opposite, that as we harness these technologies, we will indeed adapt and be able to do the things we know need to be done for healthcare.


TOM: Alan, what’s your view on this?

Alan Karthikesalingam

Um, I would say that overall, you know, since time immemorial, I mean, medicine has always been a profession that has evolved to use and embrace new tools. And it sometimes takes time to find the best ways that expert caregivers can use those tools to basically, you know, maximally benefit their patients. If you think about, you know, when the thermometer was first introduced, it was probably a little bit controversial and it wasn't immediately adopted by everyone, but gradually over time, it found its place and eventually doctors who used thermometers, you know, outnumbered those who didn't. And I think with some types of technologies like AI in medicine, there may be a very similar thing, that gradually and carefully and thoughtfully, the right place for this sort of technology has to be found, but that is usually something that's actually led by the experts who provide care to begin with. And so, you know, with rigorous, careful and thoughtful evaluation, it tends to be possible for these caregivers to find ways in which that technology is useful. And I think I would therefore look at this much more as a reskilling or a kind of an assistive tool really for expert caregivers rather than anything that, you know, seeks to replace human effort.

TOM: There are a number of challenges that exist around AI adoption in healthcare. Some of it is around implementation that could continue to slow the rate of AI advancement. But as the technology advances, so do concerns over inaccurate diagnoses that could exacerbate health inequalities that already exist in the system (for example, images used to train AI algorithms for melanoma detection are predominantly, if not entirely, Caucasian).

Greg

Yeah, this is a big challenge and the melanoma example is a great one because what the melanoma example helped us realize is the AI definitely is trained on Caucasian skin and therefore is biased but the reason that was the case is because all the textbooks were written that way. If you go back and look at all the textbooks, they're all filled with pictures which is what was used to train the AI of white people's skin.

Greg

And we're starting to see that just like human care has challenges, the AI sometimes has challenges. If the AI is only trained, say, on a certain race or a certain age, then when you bring that tool to a different race or a different age group, sometimes the AI doesn't work. Well, it turns out that happens in real life, but we often don't like to talk about it. I mean, there are many articles that have identified that there's plenty of racism in healthcare delivery, completely separate from AI. What frustrates everyone is that no one wants to codify that or make it permanent in AI. And now that we can do things reproducibly, we can identify it and reduce it. And I think it's actually a good thing. We're holding the AI to a higher standard than we typically hold our average practitioner to. And that actually is raising the bar for all of us in healthcare. If we're gonna make AI that never falls asleep on the job and is not racist and is widely available and is consistent, well, that puts more pressure on us humans to make sure we're not doing any of those things either. All of us have these same shortcomings. But now that we can scrutinise AI, we can work and we have worked to try to reduce those biases. And I think it is working.

TOM: The idea that limitations in healthcare at this brittle stage could cause harm goes against the Hippocratic oath "Primum non nocere" - "First do no harm". So in short, can you programme the Hippocratic oath into AI?

Alan Karthikesalingam (21:47.01)

I think there are benefits and risks to the uses of any technology and tools in healthcare. And there's usually two sides: any intervention or technology that could theoretically be beneficial usually also has an accompanying potential for harm. And I think there are many ways to think about how to mitigate that risk, and I think it has to be done at every stage of the technology's life cycle. So thinking about unintended adverse consequences, overtly possible but also potentially difficult-to-imagine harms, is really important from the very beginning when research is first being done, because then you can think about those and build them into the way that the systems are trained and evaluated.

There's also a really important phase in moving from early-stage research through to clinical implementation and actual use. You can think of this as the journey from code to clinic or from bench to bedside. That's so common for so many types of technology in health care. And at each stage of moving through those cycles, it's really important to build in a series of safeguards and careful assessments of safety at every stage.

And a lot of this also can be helped by thinking about safe ways in which to deploy the technology, safe intended uses for it. So a good example there, as I mentioned with Med-PaLM, would be, for such a new technology, it's much lower risk to start using it in settings that don't involve decisions around diagnosis or treatment, for example. That immediately lowers the potential for lots of harm. Similarly, using new technology in ways that have experienced and expert oversight is another way that can help mitigate against the risk of harm.

Alan Karthikesalingam (24:03.474)

And then a third principle that's very important is careful and safe measurement. Finally, you know, we're also very lucky, particularly in countries like the UK, that there are very mature regulatory frameworks around the use of technology in healthcare. And those are also very helpful.

TOM: Regulation is of paramount importance. One area of particular focus is the transparency and trust of the AI models being built.

Inma Martinez

We have priorities in regulation.

TOM: This is Inma Martinez, chair of the multi-expert group at the Global Partnership on AI, which is a multi-government initiative within the AI community.

Inma Martinez

And when I say we, I mean basically from the European Commission to the British government to the World Health Organization, the GPAI. And the most important one is that if we use technology and AI, it's got to be done to promote human wellbeing. This is what we say to improve the patient experience. And therefore, we need to assure human safety. We have to do it because it's of public interest. And then the systems that we use are developed responsibly with accountability because they cannot be black boxes that nobody knows how they function.


TOM: For AI to work best, data is king. But when it comes to health, extra care needs to be taken around safety, data privacy, confidentiality and protection for patients and citizens.

Inma Martinez

The primary asset to protect in healthcare is patient data.

Inma Martinez

And that is the bloodstream of every AI system that develops anything for healthcare. So it's incredibly valuable. It's important that if we digitize patient data, it's treated with governance, it's treated with accuracy, and that when an AI system is going to use it to detect diseases or predict the evolution of diseases, etc., we have assurance that this data is treated in a way that people have been treated with inclusiveness, with equity, that we ensure that whichever AI systems are deployed can be transparent, meaning that they can explain themselves as to how they operate and reach conclusions, especially in diagnosis. So that's the framework. And of course, it's an incredibly fragile and fragmented world, and governments put regulation where protection is needed.

Inma Martinez: So we do not want systems to operate in black boxes that nobody can challenge. That is especially one of the objectives of the European Union's AI directive, no black boxes.


TOM: Another challenge is how lawmakers can find the right balance between giving developers the freedom to innovate and regulating properly to protect the public.

Inma Martinez

Well, I'll share something with you from the latest conversations that we're having in the Global Partnership on AI and at the United Nations. So far, governments and society in general have been forced to make AI safe.

Okay, what about if we started to tell AI developing companies that they needed to deliver AI safe? AI safe from inception. Do not give us unsafe AI that then we need to start thinking how we're going to deal with this thing. Just like any other industry, the automotive industry for 70 years didn't think that it had to be safe. Governments didn't put that objective into the industry. Safety.

In '71 they did. Okay? Everything. Why we have level 5 automation cars that will eventually go through the roads is because of safety. In AI it's the same. We will ask companies to ensure that the way that the algorithms are trained, that the data sets that are used, are according to what we need them to be, with assurance. And then, that when that AI is commercialized, that the functionality, the usability, the convenience of using that is in the public interest, is really delivering a benefit higher and above what the humans can do. It will not supplant the humans just because. Because one of the objectives of all governments and organizations working now on AI directives is that humanity still has a very long and prosperous roadmap towards our splendor and cognition.

Well, we will not put AI to become a wall for us not to continue expanding our cognition and achieving what our civilization seems to be going towards: more ethical humans with more empathy, creating amazing things. The future is creativity. We're not going to stop it.

TOM: So what will the next five years bring?

Alan Karthikesalingam (27:07.242)

It's such an amazing moment in AI that, you know, the progress we've seen in the past year has been so rapid that I think projecting forward even five months feels like quite an exciting thing to try and do. And in five years, you know, potentially we could see some really transformative advances in AI technology.

One thing we'll definitely see is that health moves at the speed of trust. And that's a speed that is necessarily, you know, a bit slower and much more sort of thoughtful than the speed at which research in AI itself progresses, of course. So if you look at a five year horizon, I would imagine that many of the studies that involve clinical experts deploying and using AI in real workflows will be published in this year, next year and the year after. And that will allow time for caregivers, healthcare systems to actually scale up the way in which these tools are used. So that's one thing I would expect is that AI will continue to find its right place into many clinical workflows where it's known to be safe and effective and helpful to people.

Alan Karthikesalingam

You know, it would be amazing if over the next five years we can point to AI really giving back clinicians and patients the gift of time. That would be a fantastic thing.

I think what we've been talking about today around how health and wellbeing is a core need for humanity and we have substantial challenges, if you think of a global picture, around billions of people having access to this kind of expertise quickly, equitably, and in a timely way when they need it. And I think, short of being able to somehow 10x or 100x the number of clinical experts and, you know, putting a clinical expert on the doorstep of absolutely every citizen on the planet, doesn't feel immediately tractable. And so finding ways that we could use technology to scale up access to this kind of expertise feels a really noble and important mission.

Inma Martinez

We will definitely put huge work and effort into data governance. We need to ensure that biometrics that are used, for example, for identification and for privacy, they are really maintained, under control, and that AI is not widely used in cases where AI potentially fails.

Inma Martinez

And in the last years of my career, I have worked more and more with governments and with big institutions because I believe that AI has an enormous potential to create a huge splendor and progress that is not just economic but is really a societal welfare. We can care for people better, we can make life more effortless, and then what we need to do is ensure that it is done for the highest benefit of everyone concerned. And if I have to sit in millions of meetings speaking as to what can be done and what cannot and should not, that's what makes me work harder every day. And my relief, and I'll share this with you sincerely: I've never seen governments and institutions more focused on making sure that AI is a force for good.

They learned their lessons with the unruly and lawless internet and now they're not going to let that happen again to society and the most vulnerable. So I think I sleep better at night and I have more hope for the future.

Greg

Well, I mean, it's a quote attributed to Bill Gates. I think that we're much worse than we think at predicting the next five years. We underpredict and we overpredict what's going to happen in the next six months. And I'm sure I fall into that camp. It's very difficult to see what's going to happen that far out. For sure, what I hope happens is that AI becomes more widely available and that we find a sustainable way to deploy it.

What I'd really like all of us in the field to focus on is building AI and developing and deploying AI that really attacks important problems. Can we find not just cancer earlier, but the dangerous cancers earlier, and can we build AI in a way that makes it available to everyone? We're building AI, but one of the key challenges is, can we build it sustainably?

That's what I hope we can do. I hope we can build AI that really makes a meaningful difference in people's lives. And we're starting to see that.

Greg

The idea of distilling down centuries or at least decades of expertise into a piece of software and then embodying that and deploying that, that's still a really cool vision and I think that's very possible. Will we be able to do that in the next five years? I hope so. I think we will for certain diagnostic areas.

I was just in India last week. We met with the government. The idea that we could embody mammography AI and bring it into a mammogram machine and bring it to the literally hundreds of millions of women who are not being screened for breast cancer, even though two-thirds of cancer deaths in women are preventable, and they're not being prevented. But if we could build AI that was good enough to find breast cancer early, put it in an inexpensive mammogram machine, train the healthcare worker to deploy this thing in the field, we would be saving hundreds of thousands of lives. I mean, not overnight, but you can see a line of sight to that in a way that even the coolest pharmaceutical would take decades to develop and deploy. And with AI, the opportunity to have impact at scale is just unparalleled. And so that's why I'm spending my time doing it. I can't see a thing that can have more impact to help people faster than this field right now. It's a super exciting time to be working in this field.

TOM OUTRO: AI in healthcare offers life-changing benefits, from transforming detection and diagnosis in multiple disciplines, to increasing the efficiency of clinicians and optimising the allocation of resources, both technical and human. Detecting illnesses early is the difference between life and death. As the saying goes, prevention is better than cure.

But there are risks: social, ethical and, within this sector, clinical. Potential errors could cause patient harm, built-in bias and increased health inequality, which weigh heavily on some and not others, while increased concern over data privacy and security urges immediate discussion over transparency and trust of systems. Regulatory frameworks and codes of practice under discussion across various industries need to be extended to take into account healthcare-specific nuances.

At the cutting edge of science and technology sits a tightrope, one that multiple industries and governments must walk across with expert care, balancing the desire for a better, healthier and happier world, with the potential to do more harm than good. It could be argued that no tighter rope needs to be trod than AI in healthcare.